Metzler Receives Grant to Advance Long-Range Imaging with Machine Learning

Atmospheric turbulence, aside from causing bumpy air travel, can also affect aerial imaging systems used for surveillance, astronomy and more. Long-range imaging can be especially difficult: the chaotic flow of air between the camera and the object being photographed often leaves pictures distorted and of little use.

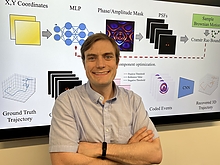

A University of Maryland expert in machine learning and computational imaging has just received a $360,000 grant from the Army Research Office (ARO) to address this challenge. Christopher Metzler, an assistant professor of computer science with an appointment in the University of Maryland Institute for Advanced Computer Studies (UMIACS), was awarded the funding through the ARO’s Early Career Program.

The award will support a three-year project to develop a system that uses high-speed cameras and machine learning algorithms to instantaneously create clearer images, even in areas of extreme turbulence.

“Regardless of how good your optics are, no matter how much you spend, you’re fundamentally limited by the atmosphere,” Metzler says. “When you have a big telescope, images are going to be blurry and distorted because of the atmosphere, and correcting for that is going to be a challenge.”

The current approach to long-range imaging measures the level of distortion, then gradually adjusts the optical system to compensate for the atmospheric interference, Metzler says.

But these types of systems are very difficult to use when trying to capture an object moving at high speeds. “By the time you look at it and try to compensate for the turbulence, the atmospheric conditions may have changed,” Metzler explains.

To overcome this, Metzler—working with his graduate students and others—is using neuromorphic cameras, also known as event cameras, which are triggered by movement and overall changes in intensity to capture data under a variety of conditions.

Traditional cameras used for long-range imaging can capture visuals at a rate of 100 frames per second. The neuromorphic cameras can capture 10,000 frames per second while using relatively little power.

Processing this nontraditional data, with thousands of frames coming in every second, is a unique challenge, one that Metzler describes as the project’s “primary hurdle.”

The raw neuromorphic data is fundamentally different from conventional camera output, he says: it looks nothing like an image, and no existing mechanisms can process it effectively.
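To give a rough sense of why this data looks nothing like an image, the sketch below models event-camera output the way it is commonly described in general: a stream of per-pixel events, each with a timestamp, a pixel location and a polarity (brighter or darker). It is an illustrative assumption, not the method Metzler's team is developing; the `Event` type, the `accumulate` helper and the sample values are all hypothetical.

```python
from typing import List, NamedTuple


class Event(NamedTuple):
    """One hypothetical event: a single pixel reporting a brightness change."""
    t: float       # timestamp in seconds
    x: int         # pixel column
    y: int         # pixel row
    polarity: int  # +1 if the pixel got brighter, -1 if it got darker


def accumulate(events: List[Event], width: int, height: int,
               t_start: float, t_end: float) -> List[List[int]]:
    """Sum event polarities per pixel over the window [t_start, t_end).

    This is one simple way to turn an event stream into something
    image-like; it discards the fine timing inside the window.
    """
    frame = [[0] * width for _ in range(height)]
    for e in events:
        if t_start <= e.t < t_end:
            frame[e.y][e.x] += e.polarity
    return frame


# Made-up sample stream for a tiny 3x2 sensor (illustrative values only).
events = [
    Event(0.0001, 0, 0, +1),
    Event(0.0002, 1, 0, +1),
    Event(0.0003, 1, 0, +1),
    Event(0.0004, 2, 1, -1),
    Event(0.0012, 0, 1, +1),  # arrives after the first 1 ms window
]

# Collapse the first millisecond of events into a single frame.
frame = accumulate(events, width=3, height=2, t_start=0.0, t_end=0.001)
```

Collapsing events into frames like this throws away most of the timing information, which hints at why purpose-built algorithms are needed for this kind of data.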

Metzler plans to design novel machine learning algorithms to process the data, as well as unique optical hardware that can capture more diverse measurements filled with more information.

One of Metzler’s graduate students, computer science doctoral student Sachin Shah, will contribute heavily to the ARO-funded research. He is the lead author on a paper describing some of the strategies the researchers expect to deploy, and he recently presented the work at the IEEE/CVF Computer Vision and Pattern Recognition Conference (CVPR) held in Seattle.

“It is rewarding to see our theoretical insights lead to tangible performance improvement,” says Shah. “This project is unlocking fundamentally new sensing capabilities by combining next-generation hardware with cutting-edge algorithms.”

Also assisting on the project is Matthew Ziemann, a physicist at the U.S. Army Research Laboratory who is pursuing his Ph.D. at Maryland under Metzler’s guidance.

Metzler says he expects that when the ARO project is completed, the U.S. Army will be able to effectively use the technology in areas like surveillance, aircraft communication and space debris monitoring.

“At the end, we’ll have a powerful approach for imaging through optical aberrations at tens of thousands of frames per second,” he says.

—Story by Shaun Chornobroff, UMIACS communications group